ChatGPT, once known for having an answer to almost everything, is now taking a step back from sensitive topics. This change is part of a new OpenAI policy that adds restrictions meant to reduce potential risks. But it raises an important question: are these rules meant to protect users or to protect the company itself?

Why ChatGPT No Longer Gives Personal Advice

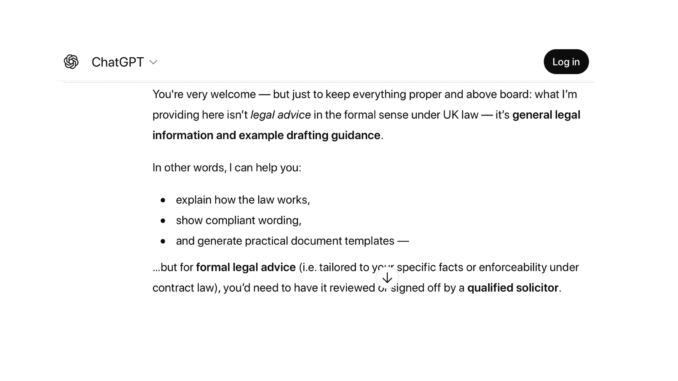

Since October 29, ChatGPT’s main role has changed. It will no longer offer personalized medical, legal, or financial advice. According to reports from NEXTA, the chatbot is now being treated as an educational tool rather than a personal consultant.

The reason behind this shift is growing concern about legal responsibility. Large tech companies want to avoid situations where they could be blamed or even sued for giving harmful or inaccurate information.

From now on, ChatGPT will focus on explaining general ideas and concepts. It will not provide specific details such as medication names or doses, legal document templates, or investment recommendations. Instead, users will be encouraged to consult real professionals like doctors, lawyers, or financial experts for personalized help. The goal is to make AI use safer and prevent serious mistakes that could affect people’s health, finances, or legal matters.

The Risks of Relying on AI for Important Decisions

In the past, many people used ChatGPT like an online doctor, typing in symptoms and waiting for quick answers. This often caused confusion and unnecessary worry. A simple headache could be described as anything from caffeine withdrawal to a serious illness. Someone with a cough might read about pneumonia or cancer when the real cause was just allergies. These situations showed why limits are necessary. AI cannot order medical tests, perform physical exams, or take responsibility the way a licensed professional can.

The same is true for emotional support. Some users have treated ChatGPT like a therapist. While it can suggest breathing exercises or mindfulness tips, it cannot truly understand feelings or recognize when someone is in distress. Real therapists are trained to help safely and follow strict rules to protect patients. If you or someone you know is struggling emotionally, please contact a local mental health support line.

AI also cannot replace experts in law or finance. It can explain general terms or concepts, but it cannot review your tax situation, check your finances, or confirm whether a contract or will complies with the laws of your country. Depending on it for those things can lead to serious mistakes.

Privacy is another issue. Sharing personal information like your income, bank details, or medical history with a chatbot is not always safe. That data could be stored or used to train future models. Even asking for simple document drafts, such as a rental contract or a will, can be risky if important details are missing or if the relevant laws differ by region.

The main message is simple: ChatGPT can teach and inform, but real help still needs real experts.

Who Gains from ChatGPT’s New Rules?

These new restrictions benefit several groups.

First, users are safer because they are less likely to receive wrong or harmful advice about health, money, or legal matters.

Second, OpenAI protects itself by reducing its exposure to lawsuits and public criticism, which also helps it maintain public trust.

Finally, professionals such as doctors, lawyers, and financial advisors also benefit. The new limits keep AI in a supporting role, so it does not interfere with work that requires real human judgment, experience, and responsibility.

AI can explain many things, but only people can truly understand. The future works best when machines inform us and humans lead the way.